If you're a developer venturing into the world of DevOps, you may have encountered a sea of bewildering jargon and terminology that seems to be spoken in an alien language. Fear not, for this beginner's guide is here to demystify the world of DevOps terminology and equip you with the knowledge you need to navigate this exciting field. So, buckle up and let's go on this journey together!

DevOps

Let's start with the basics. DevOps is a combination of "development" and "operations." It's a cultural and collaborative approach that aims to bridge the gap between software development and IT operations, promoting faster and more reliable software delivery.

DevOps recognizes the need for close collaboration and communication between development teams, who create and modify the software, and operations teams, who manage and maintain the infrastructure on which the software runs.

By integrating development and operations processes, organizations can automate manual tasks, streamline workflows, and reduce the time and effort required to move software from development to production environments. This automation is achieved through the use of various tools and technologies that support continuous integration, continuous delivery, and infrastructure as code.

What's it Like to Be in a DevOps Environment?

In a DevOps environment, developers and operations teams work closely together from the early stages of software development, ensuring that operational considerations are taken into account during the design and implementation of the software. This collaboration allows for the early detection and resolution of issues related to scalability, performance, security, and stability.

DevOps also emphasizes the importance of feedback loops and continuous improvement. By continuously monitoring the performance and reliability of software in production, teams can gather valuable insights and feedback that can be used to identify areas for improvement.

This close collaboration in DevOps encourages the use of agile methodologies and practices. By adopting an iterative and incremental approach, teams can respond quickly to changing requirements and market demands. This flexibility allows organizations to deliver value to their customers more rapidly, adapting to their needs and staying ahead of the competition.

Continuous Integration (CI)

Continuous Integration (CI) is a fundamental practice in DevOps that revolutionizes the traditional software development approach. It addresses the challenge of integrating code changes from multiple developers working on a project by enabling frequent and automated integration of code into a shared repository.

In CI, developers regularly commit their code changes to a central version control system, such as Git. The CI system then automatically triggers a series of actions to build, test, and verify the code changes. These actions may include compiling the code, running unit tests, and performing static code analysis.
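To make that sequence of actions concrete, here is a minimal sketch of what a CI system does after a commit, written in plain Python. It is not how Jenkins or any other real CI tool is implemented. The function `run_ci_steps` and the step names are hypothetical, and each step is a tiny stand-in command so the example runs anywhere Python is installed.

```python
import subprocess
import sys


def run_ci_steps(steps):
    """Run each (name, command) step in order and stop at the first failure.

    Returns a list of (name, passed) tuples for the steps that actually ran.
    """
    results = []
    for name, command in steps:
        completed = subprocess.run(command, capture_output=True, text=True)
        passed = completed.returncode == 0
        results.append((name, passed))
        if not passed:
            break  # later steps are skipped once a step fails
    return results


# Hypothetical steps: each one is a stand-in for a real build or test command.
EXAMPLE_STEPS = [
    ("build", [sys.executable, "-c", "print('compiling')"]),
    ("unit-tests", [sys.executable, "-c", "assert 1 + 1 == 2"]),
    ("static-analysis", [sys.executable, "-c", "import sys; sys.exit(1)"]),
    ("package", [sys.executable, "-c", "print('never reached')"]),
]

if __name__ == "__main__":
    for step_name, step_passed in run_ci_steps(EXAMPLE_STEPS):
        print(f"{step_name}: {'ok' if step_passed else 'FAILED'}")
```

In this sketch the static analysis step exits with a non-zero code, so the run stops there and the packaging step never executes. That is the early feedback CI is meant to give.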

The primary goal of CI is to detect integration issues early on in the development process. By continuously integrating code changes, teams can identify and resolve conflicts, dependencies, and compatibility issues before they escalate into larger problems.

Automating the CI process enables teams to achieve consistent and reliable builds. It reduces human error and ensures that the codebase remains in a functional state at all times. By catching issues early and maintaining a stable codebase, CI increases the overall software quality and paves the way for subsequent practices such as Continuous Delivery (CD).

The following are three popular tools that assist with Continuous Integration (CI):

- Jenkins: Jenkins is an open-source automation server widely used for CI. It provides a flexible and extensible platform for building, testing, and deploying software. Jenkins supports integration with various version control systems, build tools, and testing frameworks. It allows you to define pipelines as code using the `Jenkinsfile` format, enabling teams to automate their CI workflows and ensure consistent and reliable software builds.

- Travis CI: Travis CI is a hosted CI service primarily designed for GitHub projects. It offers a straightforward configuration setup using a YAML file (`.travis.yml`). Travis CI automatically detects new commits in the repository and triggers build and test processes. It supports a wide range of programming languages and provides integration with popular version control systems and cloud platforms.

- CircleCI: CircleCI is a cloud-based CI/CD platform. It supports automating the build, test, and deployment processes for software projects. CircleCI uses a configuration file to define the build steps and environment settings. It integrates with various version control systems and offers extensive support for parallelism and caching to optimize build times.

Continuous Delivery (CD)

Continuous Delivery (CD) is an extension of Continuous Integration (CI) that focuses on automating the release process to ensure fast and reliable software releases. While CI focuses on integrating code changes frequently, CD takes it a step further by automating the pipeline from code commit to a release that is ready to deploy. When the final push to production is also automated, with no manual approval step, the practice is usually called Continuous Deployment.

CD eliminates the manual and error-prone steps involved in software deployment by automating various stages of the process. This includes building the software, running comprehensive tests, and deploying it to production or staging environments.

The key benefit of CD is its ability to enable organizations to release software quickly and with confidence. With each successful build and passing test, CD ensures that the software is in a deployable state.

By embracing CD, organizations can achieve a continuous flow of software delivery, responding rapidly to customer feedback and market demands. It also facilitates iterative development and experimentation, as teams can quickly validate changes and gather real-world feedback.

Note: Most of the tools that offer Continuous Integration also offer Continuous Delivery in some form.

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure resources through machine-readable definition files. By treating infrastructure as code, it becomes easier to version, track changes, and reproduce environments.

Just like software code, infrastructure code can be stored in a version control system, allowing teams to easily review, rollback, and collaborate on infrastructure changes. This ensures that the infrastructure remains consistent and reproducible over time, enhancing reliability and reducing the risk of configuration drift.

IaC also facilitates the automation of infrastructure provisioning. Instead of manually setting up servers, networks, and other resources, IaC enables the use of configuration management tools to automatically provision and configure infrastructure based on the defined code. This saves time and minimizes human error.
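The core idea behind declarative IaC tools can be sketched in a few lines of Python: compare the infrastructure you have with the infrastructure your definition files describe, then work out what needs to change. The function `plan_changes` and the resource names below are hypothetical and only illustrate the concept. Real tools track far more detail, such as dependencies between resources.

```python
def plan_changes(current, desired):
    """Compare current and desired resource maps and return the actions needed."""
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical state: what exists today versus what the definition files ask for.
current_state = {
    "web-server": {"size": "small", "count": 1},
    "old-cache": {"size": "small", "count": 1},
}
desired_state = {
    "web-server": {"size": "small", "count": 3},
    "database": {"size": "medium", "count": 1},
}

print(plan_changes(current_state, desired_state))
# prints [('create', 'database'), ('update', 'web-server'), ('delete', 'old-cache')]
```

Because the desired state lives in a file, it can be reviewed and versioned like any other code. Running the plan a second time after applying it would produce no actions.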

Here are a few tools you can use for IaC deployments:

- Terraform: Terraform is a tool by HashiCorp that allows you to define and provision infrastructure resources across various cloud providers and services. It uses a declarative configuration language and supports a wide range of resources, enabling you to manage infrastructure as code in a consistent and scalable manner. Terraform was originally open source. Since 2023, HashiCorp has distributed it under the Business Source License.

- Ansible: Ansible is an open-source automation platform that supports infrastructure provisioning, configuration management, and application deployment. It uses YAML files called playbooks to describe infrastructure configurations and tasks. Ansible is known for its agentless architecture and ease of use.

- Pulumi: Pulumi is an open-source infrastructure as code tool that enables developers to create, deploy, and manage infrastructure using familiar programming languages such as JavaScript, TypeScript, Python, and Go. With Pulumi, you can leverage the power of code to define and manage infrastructure resources across various cloud platforms.

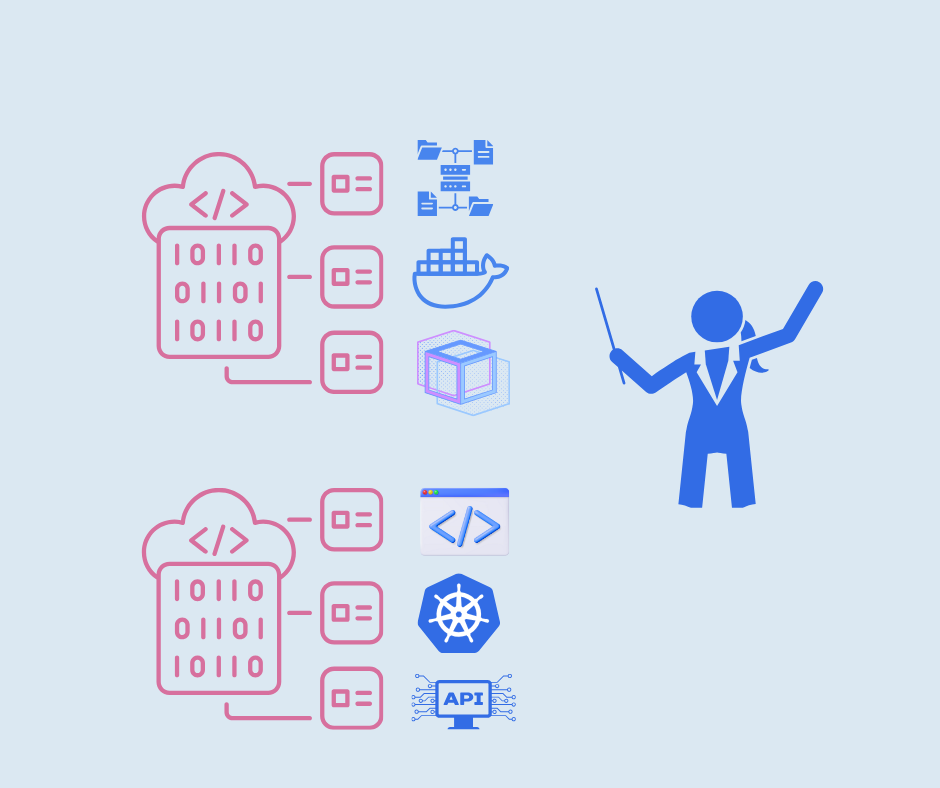

Microservices

Microservices is an architectural style where applications are built as a collection of small, loosely coupled services that can be developed, deployed, and scaled independently. This approach enhances agility, scalability, and fault isolation.

Here are a few examples that illustrate the theoretical benefits of microservices:

- Agility: Suppose a company wants to develop an e-commerce platform. By adopting a microservices architecture, they can build different services for catalog management, user authentication, order processing, payment handling, and more. Each service can be developed and deployed independently, allowing different teams to work on different services concurrently. This promotes faster development cycles, as teams can iterate and release updates to their services without impacting the entire system.

- Scalability: Imagine a social media application that experiences a sudden surge in user activity due to a viral post. With microservices, the application can scale horizontally by adding more instances of the services that are under high demand. For example, if the image upload service is experiencing a heavy load, the company can deploy additional instances of that service to handle the increased demand without affecting other parts of the application. This fine-grained scalability enables efficient resource utilization and improves overall system performance.

- Fault Isolation: In a monolithic architecture, if a single component fails, the entire application may crash. With microservices, failures are isolated to individual services, minimizing the impact on the overall system. For instance, consider a video streaming platform. If the recommendation service encounters an issue and goes down, users can still access and watch videos, as the video playback and user authentication services continue to function independently.
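The fault isolation example above can be sketched in Python. Every name here (`fetch_video`, `fetch_recommendations`, `build_watch_page`) is hypothetical, and the "services" are plain functions standing in for network calls. The point is only that a failure in one service degrades one part of the page rather than the whole request.

```python
def fetch_video(video_id):
    """Hypothetical call to a healthy video playback service."""
    return {"id": video_id, "stream_url": f"/streams/{video_id}"}


def fetch_recommendations(user_id):
    """Hypothetical call to a recommendation service that is currently down."""
    raise ConnectionError("recommendation service unavailable")


def build_watch_page(user_id, video_id):
    """Assemble the page; a recommendation failure affects only that section."""
    page = {"video": fetch_video(video_id)}
    try:
        page["recommendations"] = fetch_recommendations(user_id)
    except ConnectionError:
        page["recommendations"] = []  # fall back to an empty list
    return page


print(build_watch_page("user-1", "video-42"))
```

The user still gets a playable video. Only the recommendations section is empty until that service recovers.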

Containers

Containers provide a lightweight and isolated environment for running applications. They encapsulate the application and its dependencies, making it easy to deploy and run consistently across different environments.

Let's say a development team is building a web application. With containers, they can package the application along with all its dependencies, libraries, and configuration files into a single container image. This image can then be deployed and run consistently across different environments, such as development, staging, and production. It ensures that the application behaves the same way regardless of the underlying infrastructure, reducing the chances of deployment-related issues.

Containers provide isolation between applications and their dependencies. Each container runs in its own isolated environment, with its own file system, network interfaces, and process space. This isolation prevents conflicts between applications and ensures that changes made to one container do not affect others.

Containers have become an integral part of modern DevOps practices. They enable developers to package their applications and their dependencies as code, making it easier to version, share, and collaborate on the infrastructure stack. Containers also fit well with continuous integration and continuous deployment (CI/CD) pipelines, as they allow for rapid and consistent deployment of applications.

Docker is the go-to tool for managing containers. It is a popular open-source platform that simplifies the creation, deployment, and management of containers, making it widely used in the containerization ecosystem.

Orchestration

Orchestration refers to the automated management and coordination of containers or services within a larger system. It ensures that containers are deployed, scaled, and connected correctly, allowing for efficient utilization of resources.

An example of a widely used orchestration tool is Kubernetes. Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides a rich set of features, such as service discovery, load balancing, self-healing, and horizontal scaling, to ensure the efficient utilization of resources and seamless coordination of containers.

With Kubernetes, users can define the desired state of their application and let the platform handle the underlying infrastructure, automatically managing container placement, scaling, and network connectivity.

For instance, let's consider a scenario where a web application consists of multiple microservices running in separate containers. Kubernetes can be used to orchestrate these containers by ensuring the desired number of replicas of each microservice are running, distributing the load across them, and automatically scaling up or down based on demand. Kubernetes also takes care of networking, allowing the containers to communicate with each other seamlessly.
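The "desired number of replicas" idea can be illustrated with a small reconciliation function in Python. This is a conceptual sketch only and does not use or mirror the Kubernetes API. The function `reconcile`, the service names, and the action labels are all hypothetical.

```python
def reconcile(desired_replicas, running):
    """Return the actions needed so each service runs its desired replica count.

    `running` maps a service name to a list of health flags, one per replica
    (True means the replica is healthy).
    """
    actions = []
    for service, wanted in sorted(desired_replicas.items()):
        replicas = running.get(service, [])
        healthy = sum(1 for is_healthy in replicas if is_healthy)
        unhealthy = len(replicas) - healthy
        if unhealthy:
            actions.append((service, "remove_unhealthy", unhealthy))
        if healthy < wanted:
            actions.append((service, "start", wanted - healthy))
        elif healthy > wanted:
            actions.append((service, "stop", healthy - wanted))
    return actions


# Hypothetical cluster: cart has one replica too many, catalog has a failed
# replica, and search is not running at all.
desired = {"cart": 2, "catalog": 3, "search": 1}
observed = {"cart": [True, True, True], "catalog": [True, False]}

for action in reconcile(desired, observed):
    print(action)
```

An orchestrator runs a loop like this continuously, which is where the self-healing behaviour comes from. Whenever the observed state drifts from the desired state, it issues the actions needed to close the gap.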

Continuous Monitoring

Continuous monitoring involves collecting and analyzing real-time data about the performance and health of applications and infrastructure. It enables proactive identification and resolution of issues, improving system reliability.

For example, consider a cloud-based application that serves a large number of users. Continuous monitoring can track various performance metrics such as response times and error rates. If the monitoring system detects a sudden increase in response times or a spike in error rates, it can trigger automated alerts or notifications to the operations team. The team can then investigate the issue promptly, identify the root cause, and take appropriate actions to mitigate the problem. This proactive approach helps ensure that the application remains responsive and stable for users.
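Here is a rough sketch of that kind of check in Python. The function `check_window`, the sample data, and the thresholds (500 ms average response time, 5% error rate) are assumptions made for illustration. A real monitoring system would read metrics from production and send alerts to a paging or chat tool.

```python
from statistics import mean


def check_window(samples, max_avg_ms=500.0, max_error_rate=0.05):
    """Return alert messages for one window of (response_ms, is_error) samples."""
    if not samples:
        return []
    avg_ms = mean(ms for ms, _ in samples)
    error_rate = sum(1 for _, is_error in samples if is_error) / len(samples)
    alerts = []
    if avg_ms > max_avg_ms:
        alerts.append(f"high latency: {avg_ms:.0f} ms average")
    if error_rate > max_error_rate:
        alerts.append(f"high error rate: {error_rate:.0%}")
    return alerts


# Hypothetical measurements: (response time in ms, whether the request failed).
healthy_window = [(120, False), (150, False), (130, False), (140, False)]
degraded_window = [(900, False), (1100, True), (700, False), (1300, True)]

print(check_window(healthy_window))   # prints []
print(check_window(degraded_window))
# prints ['high latency: 1000 ms average', 'high error rate: 50%']
```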

Version Control

Version control is a system that tracks and manages changes to source code over time. It allows developers to collaborate, revert to previous versions, and maintain a history of modifications.

Git is the most widely used distributed version control system. It provides a powerful and efficient way to track changes, branch code, and collaborate with others. Understanding Git is essential for effective code management in DevOps. To learn Git, check out the official Git website.

Deployment Pipeline

A deployment pipeline is a sequence of automated stages that software goes through before reaching production. It typically includes building, testing, and deploying code, with each stage serving as a gate for quality control.

The deployment pipeline starts with the build stage, where the source code is compiled, dependencies are resolved, and artifacts are generated. This stage ensures that the software is correctly compiled and ready for further testing and deployment.

Next, the code moves to the testing stage. This stage includes various types of tests, such as unit tests, integration tests, and acceptance tests. The purpose is to validate the functionality, performance, and reliability of the software. Automated testing tools and frameworks are often used to execute these tests and provide feedback on the code's quality.

After the testing stage, the code proceeds to the deployment stage. Here, the software artifacts are deployed to the target environment, such as a staging or production server. This stage includes activities such as provisioning resources, configuring the environment, and deploying the code. Automated deployment tools and technologies facilitate the smooth and consistent deployment of the software.

Throughout the deployment pipeline, each stage acts as a gate that must be passed successfully before the software can progress to the next stage. This gatekeeping ensures that quality checks are performed at every step.
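The gate idea can be sketched as a short Python function that passes an artifact from stage to stage and stops as soon as one stage fails. The names `run_pipeline`, `build`, `run_tests`, and `deploy` are hypothetical, and the stages are toy functions rather than real build or deployment steps.

```python
def run_pipeline(stages, source):
    """Pass an artifact through each stage in order; a failing stage closes the gate.

    Each stage is a (name, function) pair. The function receives the artifact
    from the previous stage and returns the next one, or raises on failure.
    """
    artifact = source
    completed = []
    for name, stage in stages:
        try:
            artifact = stage(artifact)
        except Exception as error:
            return {
                "status": "failed",
                "failed_stage": name,
                "completed": completed,
                "reason": str(error),
            }
        completed.append(name)
    return {"status": "deployed", "completed": completed, "artifact": artifact}


def build(source):
    """Toy build stage: turn a source name into a package name."""
    return {"package": f"{source}.tar.gz"}


def run_tests(artifact):
    """Toy test stage: reject any package whose name contains 'broken'."""
    if "broken" in artifact["package"]:
        raise RuntimeError("acceptance tests failed")
    return artifact


def deploy(artifact):
    """Toy deploy stage: record the target environment on the artifact."""
    return {**artifact, "environment": "staging"}


STAGES = [("build", build), ("test", run_tests), ("deploy", deploy)]

print(run_pipeline(STAGES, "app-1.0"))
print(run_pipeline(STAGES, "broken-app"))
```

The second run fails at the test stage, so the deploy stage is never reached. The result records which stages completed and why the pipeline stopped.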

Infrastructure Orchestration

Infrastructure orchestration focuses on automating the provisioning, configuration, and management of infrastructure resources.

Tools like Terraform and AWS CloudFormation are commonly used for infrastructure orchestration. Terraform is an infrastructure as code (IaC) tool that allows users to define and manage infrastructure resources declaratively. It supports multiple cloud providers and infrastructure platforms, enabling users to define their desired infrastructure state in a configuration file. Terraform then provisions and configures the resources accordingly, making it easier to manage infrastructure changes and deployments across different environments.

AWS CloudFormation, on the other hand, is a service provided by Amazon Web Services (AWS) specifically for infrastructure orchestration. It allows users to define their infrastructure resources using JSON or YAML templates. CloudFormation then handles the provisioning and management of these resources, ensuring the desired infrastructure state is maintained. CloudFormation also supports the ability to create, update, and delete infrastructure stacks, making it easy to manage complex infrastructure setups and deployments in AWS.

For example, let's consider an organization that wants to provision a web application infrastructure on a cloud platform. Using infrastructure orchestration, they can define their infrastructure requirements using Terraform or CloudFormation templates. The templates can specify resources like virtual machines, load balancers, databases, and networking configurations. When executed, the orchestration tool takes care of provisioning and configuring the infrastructure resources based on the defined templates. This automation eliminates the need for manual setup and reduces the chances of configuration errors. It also enables infrastructure-as-code practices, allowing infrastructure configurations to be version-controlled, tested, and easily reproduced.

Scalability

Scalability refers to the ability of a system to handle increased workload or growing demands. DevOps enables organizations to build scalable systems by employing techniques like horizontal scaling and load balancing.

Horizontal scaling involves adding more resources, such as servers or instances, to distribute the workload across multiple machines. This approach allows organizations to handle increased traffic or workload by leveraging additional computing power. By scaling horizontally, system performance can be improved, and the system's capacity to handle concurrent requests or process large amounts of data can be increased.

Load balancing is another key technique employed in scalable systems. It involves distributing incoming requests or network traffic across multiple servers or computing resources to optimize resource utilization and ensure even distribution of workloads. Load balancers act as intermediaries between clients and the system's resources, intelligently routing requests to different servers based on factors such as server availability, response times, or predefined rules. Load balancing helps to prevent any single server from becoming overwhelmed and ensures that the system can efficiently handle increasing traffic or demand.
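One of the simplest routing rules is round robin: hand each new request to the next server in the list and skip any server that is marked as unavailable. The sketch below shows that rule in Python. The class `RoundRobinBalancer` and the server names are hypothetical. Production load balancers use more signals, such as response times or connection counts.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Rotate through servers in order, skipping any that are marked down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._rotation = cycle(self.servers)

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        if not self.healthy:
            raise RuntimeError("no healthy servers available")
        # At least one server is healthy, so this loop always terminates.
        while True:
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
print([balancer.next_server() for _ in range(4)])
# prints ['server-a', 'server-b', 'server-c', 'server-a']

balancer.mark_down("server-b")
print([balancer.next_server() for _ in range(3)])
# prints ['server-c', 'server-a', 'server-c']
```

Horizontal scaling fits in naturally here: adding a machine just means adding another entry to the server list.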

High Availability

High availability ensures that systems remain operational and accessible even in the face of failures or disruptions. DevOps practices help achieve high availability through redundancy, fault tolerance, and automated failover mechanisms.

Redundancy is a key aspect of high availability. It involves duplicating critical components or resources in a system to create backups or alternatives. By having redundant components, such as servers, databases, or networking infrastructure, organizations can mitigate the impact of failures. If one component fails, the redundant one can take over seamlessly, ensuring uninterrupted service.

Fault tolerance is another important aspect of high availability. It refers to the ability of a system to continue operating despite the occurrence of faults or failures. DevOps practices promote building fault-tolerant systems by employing techniques such as error handling, graceful degradation, and automated recovery.

Automated failover mechanisms are essential for achieving high availability. When a failure occurs, an automated failover mechanism detects the failure and triggers the necessary actions to restore the system to a working state. This can involve shifting the workload to a redundant system, initiating data replication, or redirecting traffic to an alternate server or data center.
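As a simplified illustration, the following Python sketch shows the detect-and-switch part of a failover mechanism. The class `FailoverController`, the node names, and the `status` dictionary standing in for a real health check are all hypothetical. Data replication and traffic redirection are left out.

```python
class FailoverController:
    """Track the active node and switch to a standby when it becomes unhealthy."""

    def __init__(self, nodes, is_healthy):
        self.nodes = list(nodes)  # ordered by priority, primary first
        self.is_healthy = is_healthy
        self.active = self.nodes[0]
        self.events = []

    def check(self):
        """Run one health check and fail over if the active node is down."""
        if self.is_healthy(self.active):
            return self.active
        for node in self.nodes:
            if node != self.active and self.is_healthy(node):
                self.events.append(f"failover: {self.active} -> {node}")
                self.active = node
                return node
        raise RuntimeError("no healthy node available")


# Hypothetical health data; a real check might probe a port or an HTTP endpoint.
status = {"primary": True, "standby": True}
controller = FailoverController(["primary", "standby"], lambda node: status[node])

print(controller.check())   # prints primary
status["primary"] = False   # simulate a failure of the primary node
print(controller.check())   # prints standby
print(controller.events)    # prints ['failover: primary -> standby']
```

This only works because of the redundancy described earlier. Without a healthy standby node, the controller has nothing to fail over to.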

Incident Management

Incident management is the process of detecting, responding to, and resolving incidents that impact system performance or availability. It often involves timely communication, root cause analysis, and implementing preventive measures.

Timely communication is essential during incident management. When an incident occurs, it is crucial to promptly notify the responsible people, including the incident response team, relevant technical staff, and any other individuals who need to be informed. Effective communication ensures that the incident is properly escalated, and the necessary resources and expertise are mobilized to address the issue.

Root cause analysis is a critical aspect of incident management. After an incident is resolved, it is important to conduct a thorough investigation to identify the underlying cause or causes. This is often done by examining logs.

Implementing preventive measures is an integral part of incident management. Once the root cause is identified, organizations can take proactive steps to prevent future incidents, mainly by making system configuration changes, improving monitoring and alerting mechanisms, enhancing security measures, or revising processes and procedures. This reduces the likelihood and impact of incidents, leading to improved system performance and availability.

DevSecOps

DevSecOps integrates security practices into the DevOps workflow. It emphasizes the collaboration between development, operations, and security teams to address security concerns throughout the software development lifecycle.

In traditional software development processes, security measures are often implemented as an afterthought or considered only during the later stages of development. However, DevSecOps recognizes the importance of early and continuous security integration. By integrating security practices from the beginning, organizations can create a culture of security awareness and ensure that security is a shared responsibility across teams.

Conclusion

As a developer delving into the world of DevOps, familiarizing yourself with these key terms will empower you to communicate effectively and comprehend discussions within the DevOps ecosystem. Remember, this is just the tip of the iceberg, and the world of DevOps is vast and ever-evolving. Embrace the learning journey, stay curious, and explore the vast opportunities that DevOps brings to the table. Happy coding!